Kubernetes — Debugging NetworkPolicy (Part 1)

For something as important as NetworkPolicy, debugging is surprisingly painful. There’s even a section in the documentation that is devoted to what you can’t do with network policies, and some of the items in that section seem to me to be a bit mind-boggling. For example, you can’t:

- target services by name (although you can target pods or namespaces by their labels)

- create default policies which are applied to all namespaces and pods (there are, however, some third-party Kubernetes distributions and network plugins which can do this)

- and, to my mind the MOST disappointing/shocking, directly log network security events (for example, connections that are blocked or accepted)

There are also some significant features, not listed in the document above, that you might incorrectly hope are available. For example, you can’t block or allow traffic based on DNS name (for endpoints outside your cluster, you’ll have to use a subnet/CIDR). With any luck, there will be a v2 of NetworkPolicy at some point…

Various Kubernetes distributions (GKE, OpenShift, etc.) include full or partial solutions to these problems, or you might be able to install your own network plugin (Project Calico, Cilium, etc.). Unfortunately, these solutions are all different: each has its own way to enable and configure features, its own commands for obtaining audit/diagnostic information, its own capabilities, and so on.

Notwithstanding these challenges, NetworkPolicy is still essential. If you are having difficulties with your NetworkPolicies, hopefully the following ideas will help you start using them successfully.

Do you have a network plugin enabled that supports NetworkPolicy?

If your problem is that traffic is allowed that should be blocked by your NetworkPolicies, you may simply not be using a network plugin that supports NetworkPolicy. If your cluster does not have a suitable network plugin, NetworkPolicy resources will have no effect. For example, at the time this article was written, Docker Desktop’s default network plugin does not support NetworkPolicy. You will be able to deploy NetworkPolicies without any apparent errors; they just won’t do anything.

I’ve never tried to install a non-default network plugin on Docker Desktop, but installing Calico on Minikube is very easy. Non-local Kubernetes instances such as EKS, AKS, OpenShift, GKE, Anthos, etc. usually already have a network plugin that supports NetworkPolicy, or can be easily configured to enable that support, and so a bad network plugin is usually not a problem in these environments.

If you don’t know what network plugin your cluster is using, the easiest way to determine if NetworkPolicy is supported is to remove all NetworkPolicies from a test namespace and deploy a Pod. Exec into a container in that Pod, and try to make a connection to anything or even simply do a DNS resolution.

You can use any command that you have available in your container, for example ping, wget, curl, etc. If you don’t have anything handy, you could even use bash redirection (at least for tcp connections), for example:

    # If the NetworkPolicies allow requests to example.com on
    # port 80 (and allow connections to the Kubernetes DNS server
    # on port 53 for name resolution), the command should quickly
    # print "open"
    bash-5.1# </dev/tcp/example.com/80 && echo open || echo closed
    open

If using bash redirection, don’t forget to include the starting < in your command. Once you have a working test case, deploy a “default-deny-all” policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-deny-all
      namespace: my-ns
    spec:
      podSelector: {}
      policyTypes:
      - Ingress
      - Egress

Re-run your test case. If you now start seeing an error, then you know that your NetworkPolicy is blocking traffic, and therefore that NetworkPolicies are supported by your network plugin:

    # If connectivity is blocked, the command will "hang" for a while
    # before printing "closed". Because the default-deny-all policy
    # blocks DNS resolution, the delay was only about 5 seconds
    # in my testing and an "Invalid argument" error was also produced
    bash-5.1# </dev/tcp/example.com/80 && echo open || echo closed
    bash: example.com: Try again
    bash: /dev/tcp/example.com/80: Invalid argument
    closed

If instead of seeing “closed” you still see “open” after deploying the default-deny-all NetworkPolicy, your Kubernetes cluster does not support NetworkPolicy, and you’ll need to change your network plugin (or change your Kubernetes distribution, I suppose). You may need to engage your cluster administrator and/or the group that owns the software distribution package if you are in a corporate environment.
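The open/closed check used in this section can be wrapped into two small helpers so that the "before" and "after" results are easy to compare. This is only a sketch: the function names probe_tcp and enforcement_verdict are made up for illustration, and it assumes that both bash and a timeout command are available inside the container.

```shell
# probe_tcp HOST PORT [TIMEOUT_SECONDS]   (hypothetical helper name)
# Prints "open" if a TCP connection succeeds, otherwise "closed".
# Assumes bash and a `timeout` command exist in the container.
probe_tcp() {
  if timeout "${3:-5}" bash -c '</dev/tcp/"$0"/"$1"' "$1" "$2" 2>/dev/null; then
    echo open
  else
    echo closed
  fi
}

# enforcement_verdict BEFORE AFTER   (hypothetical helper name)
# BEFORE = probe result with no NetworkPolicies in the namespace
# AFTER  = probe result after deploying default-deny-all
enforcement_verdict() {
  case "$1/$2" in
    open/closed) echo "enforced" ;;      # the policy blocked the traffic
    open/open)   echo "not enforced" ;;  # the policy had no effect
    *)           echo "inconclusive" ;;  # the test case never worked
  esac
}

# Example use inside the test Pod:
#   before=$(probe_tcp example.com 80)
#   (deploy default-deny-all from another terminal)
#   after=$(probe_tcp example.com 80)
#   enforcement_verdict "$before" "$after"
```

An "inconclusive" result means the test case failed even without any NetworkPolicies, so it says nothing about the network plugin either way.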

Make sure the problem is actually caused by your NetworkPolicy

If your problem is that traffic is being blocked that should be allowed, make sure that the issue isn’t being caused by something else. Your Pod might be trying to make a connection to (or receive a connection from) an external resource that is behind its own firewall. Try removing all NetworkPolicies temporarily from your Namespace and retry the test case.

If the application now works, you’ll know the issue is in fact a NetworkPolicy problem. If it still doesn’t work, you’ll know the issue is likely something other than NetworkPolicy. However, be aware that some network plugins support NetworkPolicy that is global. Calico, for example, implements GlobalNetworkPolicy. Global network policies are typically non-namespaced objects, and you may need to ask your cluster administrator for assistance in determining whether your connections are being blocked by a global policy.
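If you do remove the NetworkPolicies from a Namespace temporarily, it may be worth saving a copy first. The following is a minimal sketch, assuming you have permission to read and delete NetworkPolicies in the Namespace; the function name and the backup file path are placeholders of my own.

```shell
# remove_policies_with_backup NAMESPACE BACKUP_FILE   (hypothetical helper name)
# Exports the Namespace's NetworkPolicies to a file, then deletes them.
remove_policies_with_backup() {
  ns=$1
  backup=$2
  # Save the current policies first; stop here if the export fails.
  kubectl get networkpolicy -n "$ns" -o yaml > "$backup" || return 1
  # Only delete once a copy has been written.
  kubectl delete networkpolicy --all -n "$ns"
}

# Example:
#   remove_policies_with_backup my-ns /tmp/my-ns-netpol-backup.yaml
```

When the test is finished, re-apply your original manifests so the Namespace is not left unprotected; the exported file is mainly a safety net in case those manifests are not at hand.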

Reread the documentation and double-check your work and assumptions

So you’ve confirmed that your cluster supports NetworkPolicy, and also that it is your NetworkPolicy that is incorrectly blocking (or incorrectly allowing) the traffic. Now would be a good time to reread the documentation.

After rereading the documentation, double-check that your selectors actually match the labels on your Pods or Namespaces as applicable. Also make sure that you haven’t combined podSelector and namespaceSelector in incorrect ways.
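One way to double-check a selector is to ask the API server which objects it actually matches. The helpers below are a small sketch using kubectl label selectors; the function names are invented for this example, and the label values are the ones used in the examples in this article.

```shell
# pods_matching NAMESPACE SELECTOR   (hypothetical helper name)
# Lists the Pods in NAMESPACE whose labels match SELECTOR.
pods_matching() {
  kubectl get pods -n "$1" -l "$2" -o name
}

# namespaces_matching SELECTOR   (hypothetical helper name)
# Lists the Namespaces whose labels match SELECTOR.
namespaces_matching() {
  kubectl get namespaces -l "$1" -o name
}

# Examples:
#   pods_matching my-ns role=db              # Pods the policy applies to
#   pods_matching my-ns role=app             # Pods allowed as a source
#   namespaces_matching somelabel=myvalue    # Namespaces allowed as a source
```

If one of these prints nothing, the corresponding selector in your NetworkPolicy matches nothing either. Running kubectl get pods -n my-ns --show-labels shows the labels that are actually present.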

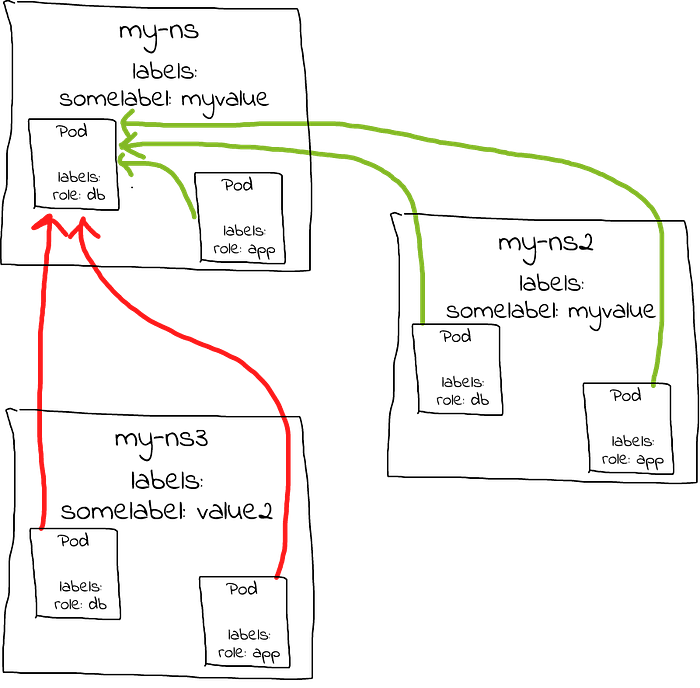

For example, the following NetworkPolicy allows traffic from all Pods in the my-ns namespace that have a role: app label OR from all Pods in any namespace that has a somelabel: myvalue label:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-networkpolicy1
      namespace: my-ns
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
      - Ingress
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              somelabel: myvalue
        - podSelector:
            matchLabels:
              role: app
        ports:
        - protocol: TCP
          port: 80

For example, if there were 3 namespaces and Pods as shown below, the connections in green would be allowed but the connections in red would be blocked:

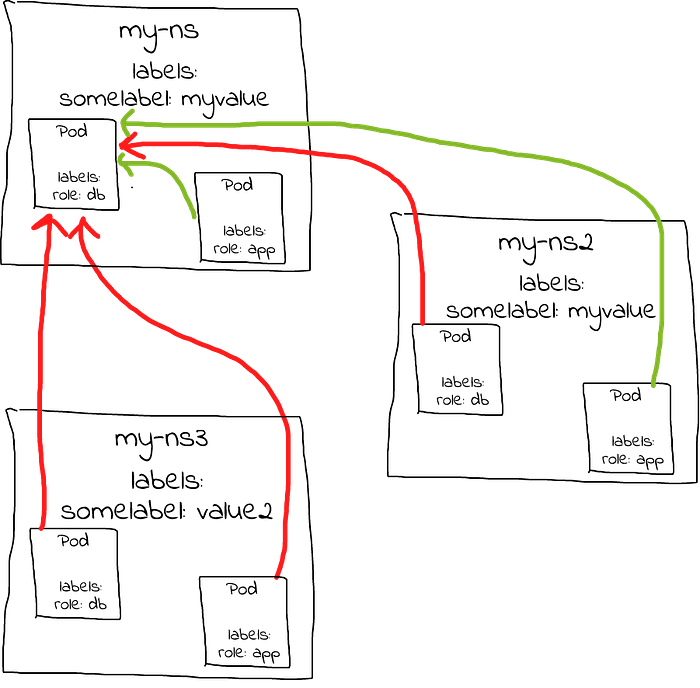

However, a slightly different NetworkPolicy would allow traffic only from Pods that themselves have a label role: app AND that are in namespaces that have a somelabel: myvalue label:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-networkpolicy2
      namespace: my-ns
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
      - Ingress
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              somelabel: myvalue
          podSelector:
            matchLabels:
              role: app
        ports:
        - protocol: TCP
          port: 80

Continuing with the same example above, deploying this second NetworkPolicy instead of the first would have the following result:

The absence of a single hyphen meaningfully changes the results of the NetworkPolicy…
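The difference between the two policies can be written out as a tiny truth table. This is a simplified model of the from clauses only (it ignores the port and the role: db target selector), and the function names are my own.

```shell
# Arguments for both functions:
#   $1 = namespace of the source Pod
#   $2 = "yes" if that namespace has the label somelabel=myvalue
#   $3 = "yes" if the source Pod has the label role=app

# test-networkpolicy1: two separate list items, so either one is enough.
# A podSelector on its own only matches Pods in the policy's namespace (my-ns).
allowed_by_policy1() {
  if [ "$2" = yes ]; then echo allow; return; fi
  if [ "$1" = my-ns ] && [ "$3" = yes ]; then echo allow; return; fi
  echo deny
}

# test-networkpolicy2: one list item with both selectors, so both must match.
allowed_by_policy2() {
  if [ "$2" = yes ] && [ "$3" = yes ]; then echo allow; else echo deny; fi
}

# Example: a Pod without role=app in a namespace labelled somelabel=myvalue
#   allowed_by_policy1 other-ns yes no
#   allowed_by_policy2 other-ns yes no
```

In this model the first call prints allow and the second prints deny, which is exactly the difference made by the missing hyphen.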

Ensure That Namespace Labelling is Controlled/Governed

Unless you control all of the namespaces in your cluster, you should also make sure that you only use Namespace labels that can’t be defined arbitrarily by other untrusted users of your cluster. If you allow traffic from Namespaces with the label somelabel: myvalue but anyone can cause their namespace to be labelled with any label they wish, your NetworkPolicy may be essentially useless.

Starting with v1.21 beta, the Kubernetes control plane will set an immutable label kubernetes.io/metadata.name on all namespaces, provided that the NamespaceDefaultLabelName feature gate is enabled. The value of the label is the namespace name. If you are not sure whether your namespaces are labelled, try describing a Namespace:

    >kubectl describe ns my-client-ns
    Name:         my-client-ns
    Labels:       app.kubernetes.io/name=my-deployment-basic
                  kubernetes.io/metadata.name=my-client-ns
    Annotations:  <none>
    Status:       Active

If you don’t have this feature gate enabled, or are on a version before v1.21, I recommend creating this label yourself on all of your Namespaces (if you don’t have access to patch Namespaces yourself, talk to your cluster administrator).
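On a cluster where the label is not set automatically, adding it by hand could look something like the sketch below. The function names are hypothetical, and it assumes you are allowed to label Namespaces.

```shell
# label_namespace_with_name NAMESPACE   (hypothetical helper name)
# Adds kubernetes.io/metadata.name=<namespace name> to the Namespace.
# Without --overwrite, kubectl refuses to change an existing, different value.
label_namespace_with_name() {
  kubectl label namespace "$1" "kubernetes.io/metadata.name=$1"
}

# show_namespace_labels NAMESPACE   (hypothetical helper name)
# Prints the Namespace together with its labels, to verify the result.
show_namespace_labels() {
  kubectl get namespace "$1" --show-labels
}

# Example:
#   label_namespace_with_name my-client-ns
#   show_namespace_labels my-client-ns
```

Keep in mind that a label you add yourself is only as trustworthy as the controls on who may label Namespaces in your cluster.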

Example NetworkPolicy

If your Namespace is labelled as described above, you can specify a NetworkPolicy similar to the following:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: my-app-ns
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
      - Ingress
      - Egress
      ingress:
      - from:
        - ipBlock:
            cidr: 172.17.0.0/16
            except:
            - 172.17.1.0/24
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: my-client-ns
          podSelector:
            matchLabels:
              role: frontend
        ports:
        - protocol: TCP
          port: 6379
      egress:
      - to:
        - ipBlock:
            cidr: 10.0.0.0/24
        ports:
        - protocol: TCP
          port: 5978

This NetworkPolicy essentially says:

    for all Pods p1 in Namespace my-app-ns do
      if Pod p1 has label "role: db"
        DENY both ingress & egress traffic without a subsequent ALLOW
        if ingress (inbound) connection to TCP port 6379
          if connection from Pod p2 in my-client-ns
            if Pod p2 has label "role: frontend"
              ALLOW
              break
            end if
          else if connection from IP 172.17.0.0 - 172.17.255.255
            if connection not from IP 172.17.1.0 - 172.17.1.255
              ALLOW
              break
            end if
          end if
        else if egress (outbound) connection to TCP port 5978
          if connection to 10.0.0.0 - 10.0.0.255
            ALLOW
            break
          end if
        end if
      end if
    done
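The ipBlock part of that logic (a CIDR with an except range) can be checked by hand with a little integer arithmetic. The following is a rough sketch for IPv4 only; the function names are invented, and it models only the ipBlock peer of the ingress rule, not the ports or the Pod/Namespace selectors.

```shell
# ip_to_int A.B.C.D -> prints the IPv4 address as a single integer
ip_to_int() {
  local IFS=.
  set -- $1                      # split on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr IP CIDR -> succeeds if IP falls inside CIDR (e.g. 172.17.0.0/16)
in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # network address before the slash
  bits=${2#*/}                   # prefix length after the slash
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}

# ipblock_verdict IP -> "allow" if IP is in 172.17.0.0/16 but not in
# the excepted 172.17.1.0/24, otherwise "deny" (for this peer only)
ipblock_verdict() {
  if in_cidr "$1" 172.17.0.0/16 && ! in_cidr "$1" 172.17.1.0/24; then
    echo allow
  else
    echo deny
  fi
}

# Example:
#   ipblock_verdict 172.17.5.9    # inside the CIDR, outside the exception
#   ipblock_verdict 172.17.1.20   # inside the exception
```

A "deny" from this helper only means the ipBlock peer does not match; the connection could still be allowed by the namespaceSelector/podSelector peer.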

I hope this has helped you understand NetworkPolicy a little better and given you some tips for figuring out why your policies aren’t working as you intended.

Part 2 of this series is now available. It discusses which side of the conversation to debug from (if you can), describes a few common scenarios where NetworkPolicy can block traffic, and provides examples of NetworkPolicy objects that allow that traffic, so please check it out when you get a chance!
